Most people talk too much about values relative to facts, as they care more about showing off their values than about learning facts. So I usually avoid talking about values. But I’ll make an exception today for this value: expanding rather than fighting about possibilities.

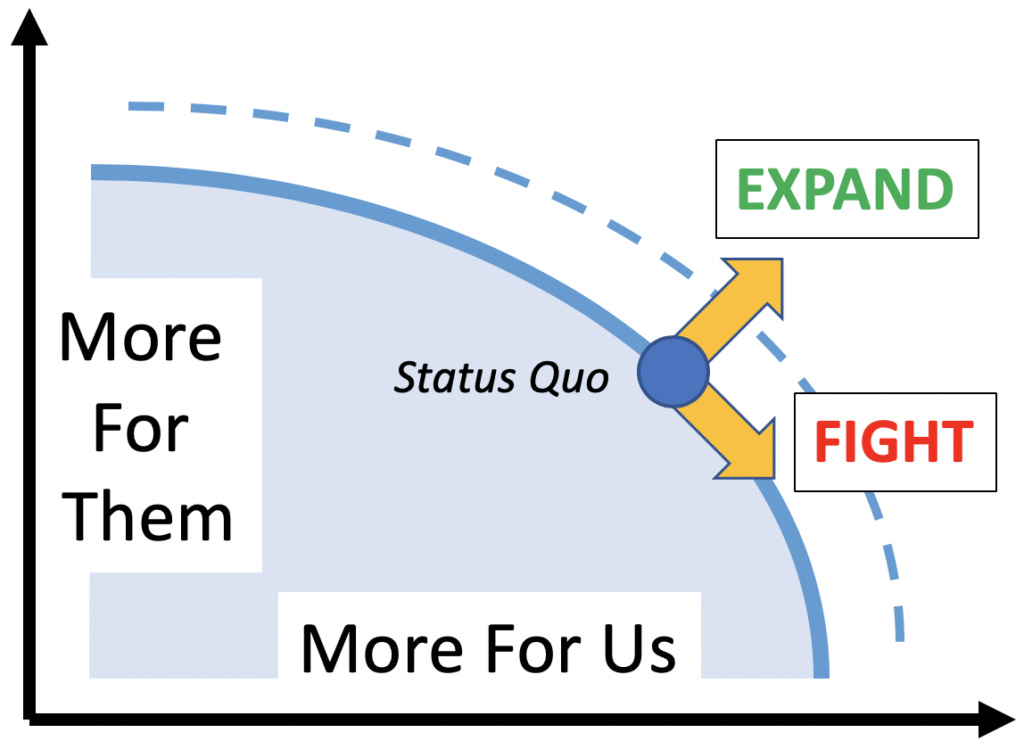

Consider the following graph. On the x-axis you, or your group, get more of what you want. On the y-axis, others get more of what they want. (Of course each axis really represents a high dimensional space.) The blue region is a space of possibilities, the blue curve is the frontier of best possibilities, and the blue dot is the status quo, which happens if no one tries to change it.

In this graph, there are two basic ways to work to get more of what you want: move along the frontier (FIGHT), or expand it (EXPAND). While expanding the frontier helps both you and others, moving along the frontier helps you at others’ expense.

All else equal, I prefer expanding over fighting, and I want stronger norms for this. That is, I want our norms, all else equal, to praise expansion more and to shame fighting more. This isn’t to say I want all forms of fighting to be shamed, or shamed equally, or want all kinds of expansion to get equal praise. For example, it makes sense to support some level of “fighting back” in response to fights initiated by others. But we should all expect to be better off, on average, when our efforts are directed more toward expanding than toward fighting. Fighting should be suspicious, and need justification, relative to expansion.

This distinction between expanding and fighting is central to standard economic analysis. We economists distinguish “efficiency improving” policies that expand possibilities from “redistribution” policies that take from some to give to others, and also from “rent-seeking” efforts that actually cut possibilities. Economists focus on promoting efficiency and discouraging rent-seeking. If we take positions on redistribution, we tend to call those “non-economic” positions.

We economists can imagine an ideal competitive market world. The world we live in is not such a world, at least not exactly, but it helps to see what would happen in such a world. In this ideal world, property rights are strong, we each own stuff, and we trade with each other to get more of what we want. The firms that exist are the ones that are most effective at turning inputs into desired outputs. The most cost-effective person is assigned to each job, and each customer buys from their most cost-effective supplier. Consumers, investors, and workers can make trades across time, and innovations happen at the most cost-effective moment.

In this ideal world, we maximize the space of possibilities by allowing all possible competition and all possible trades. In that case, all expansions are realized, and only fights remain. But in other, more realistic worlds, many “market failures” (and also government failures) pull back the frontier of possibilities. So we economists focus on finding actions and policies that can help fix such failures. And in some sense, I want everyone to share this pro-expansion, anti-fight norm of economists.

Described in this abstract way, few may object to what I’ve said so far. But in fact most people find a lot more emotional energy in fights. Most people are quickly bored with proposals that purport to help everyone without helping any particular groups more than others. They get similarly bored with conversations framed as collecting and sharing relevant information. They instead get far more energized by efforts to help us win against them, including conversations framed as arguing with and even yelling at enemies. We actually tend to frame most politics and morality as fights, and we like it that way.

For example, much “social justice” energy is directed toward finding, outing, and “deplatforming” enemies. Yes, when social norms are efficient, enforcing such norms against violators can enhance efficiency. But our passions are nearly as strong when enforcing inefficient norms or norm-like agendas, just as crime dramas are nearly as exciting when depicting the enforcement of bad crime laws or non-law vendettas. Our energy comes from the fights, not from some indirect social benefit resulting from such fights. And we find it way too easy to just presume that the goals of our social factions are very widely shared and efficient norms.

Consider fertility and education. Many people get quite energized on the topic of whether others are having too many or not enough kids, and on whether they are raising those kids correctly. We worry about which nations, religions, classes, intelligence levels, mental illness categories, or political allegiances are having more kids, or getting more kids to be educated or trained in their favored way. And we often seek government policies to push our favored outcomes, such as sterilizing the mentally ill, or requiring schools to teach our favored ideologies.

But in an ideal competitive world, each family picks how many kids to have and how to raise them. If other people have too many kids and have trouble feeding them, that’s their problem, not yours. Same for if they choose to train their kids badly, or if those kids are mentally ill. Unless you can identify concrete and substantial market failures that tend to induce the choices you don’t like, and which are plausibly the actual reason for your concerns here, you should admit you are more likely engaged in fights, not in expansion efforts, when arguing on fertility and education.

And it isn’t enough to note that we are often inclined to supply medicine, education, or food collectively. If such collective actions are your main excuse for trying to control other folks’ related choices, maybe you should consider not supplying such things collectively. It also isn’t enough to note the possibility of meddling preferences, wherein you care directly about others’ choices. Not only is evidence of such preferences often weak, but meddling preferences don’t usually change the possibility frontier, and thus don’t change which policies are efficient. Beware the usual human bias to try to frame fighting efforts as more pro-social expansion efforts, and to make up market failure explanations in justification.

Consider bioconservatism. Some look forward to a future where they’ll be able to change the human body, adding extra senses, and modifying people to be smarter, stronger, more moral, and even immortal. Others are horrified by and want to prevent such changes, fearing that such “post-humans” would no longer be human, and seeing societies of such creatures as “repugnant” and having lost essential “dignities”. But again, unless you can identify concrete and substantial market failures that would result from such modifications, and that plausibly drive your concern, you should admit that you are engaged in a fight here.

It seems to me that the same critique applies to most current AI risk concerns. Back when my ex-co-blogger Eliezer Yudkowsky and I discussed his AI risk concerns here on this blog (concerns that got much wider attention via Nick Bostrom’s book), those concerns were plausibly about a huge market failure. Just as there’s an obvious market failure in letting someone experiment with nuclear weapons in their home basement near a crowded city (without holding sufficient liability insurance), there’d be an obvious market failure from letting a small AI team experiment with software that might, in a weekend, explode to become a superintelligence that enslaved or destroyed the world. While I see that scenario as pretty unlikely, I grant that it is a market failure scenario. Yudkowsky and Bostrom aren’t fighting there.

But when I read and talk to people today about AI risk, I mostly hear people worried about local failures to control local AIs, in a roughly competitive world full of many AI systems with reasonably strong property rights. In this sort of scenario, each person or firm that loses control of an AI would directly suffer from that loss, while others would suffer far less or not at all. Yet AI risk folks say that they fear that many or even most individuals won’t care enough to try hard enough to keep sufficient control of their AIs, or to prevent those AIs from letting their expressed priorities drift as contexts change over the long run. They say this even though they don’t point to particular market failures here, and even though such advanced AI systems are still a long way off, and we’ll likely know a lot more about, and have plenty of time to deal with, AI control problems when such systems actually arrive.

Thus most current AI risk concerns sound to me a lot like fertility, education, and bioconservatism concerns. People say that it is not enough to control their own fertility, the education of their own kids, the modifications of their own bodies, and the control of their own AIs. They worry instead about what others may do with such choices, and seek ways to prevent the “risk” of others making bad choices. And in the absence of identified concrete and substantial market failures associated with such choices, I have to frame this as an urge to fight, instead of to expand the space of possibilities. And so according to the norms I favor, I’m suspicious of this activity, and not that eager to promote it.

Democrats put minorities at a disadvantage

"If such collective actions are your main excuse for trying to control other folks’ related choices, maybe you should consider not supplying such things collectively..."

This. If only we could do this.

From a totally self-centered point of view we seem to be doing everything right in order to get ahead. No children (by choice), double income, steady jobs, look after ourselves (health, exercise, no smoking/doping), live frugally, save (for retirement)...

And get taxed to death.

To support "the other". Yes, some of the tax goes to actual governance, but there is no denying that larger and larger portions go to "social spending".

If it weren't for excess taxes (the part in the budget that supports the other) we would be able to retire right now.

Now I get that one can argue that, if not for state education, the masses will go uneducated and turn to crime (an argument for expanding?). And this is where people turn to the options re: education/population you mention. No one is an island.

I see that there is very little in it for people like myself to gain by expanding...?

What about the silent man?